Recent developments in Artificial Intelligence (AI) are introducing new Machine Learning techniques that solve increasingly complicated problems with higher predictive capacity. However, this predictive power comes with increasing complexity, which can make these models difficult to interpret. Even though these models produce very accurate results, we need explanations in order to understand and trust their decisions. This is where eXplainable Artificial Intelligence (XAI) takes the stage.

XAI is an emerging field that focuses on techniques to break open the black-box nature of Machine Learning models and produce human-level explanations. The black box refers to models that are too complex to interpret, in other words opaque models such as the highly favoured Deep Learning models. Of course, not all Machine Learning models are too complex; there are also transparent models such as linear/logistic regression and decision trees. These models expose the relationship between feature values and the target outcome, which makes them easier to interpret. Complex models offer no such direct view.

As a colleague explained in a previous blog post, “Can we trust AI if we don’t know how it works?”, the main reason we need XAI is to enable trust. We have to be able to explain a model’s decisions before we can trust them, especially when we act on its predictions and the outcome concerns human safety. Another reason XAI is needed is to detect and understand bias in these decisions. Since bias can be found in many aspects of daily life, it’s no surprise that it also exists in the datasets used in practice, even well-established ones. Bias can result in unfair treatment based on characteristics such as gender or race. This issue is also investigated in the recent documentary on Netflix, which highlights biased Machine Learning algorithms and their effect on society. XAI can help us detect and understand fairness issues, and therefore eliminate them. If you need more convincing, you can find many more reasons in “the why of explainable AI”.

There is a wide variety of XAI methods, but since the field is very new, there isn’t an established consensus on terminology or taxonomy yet. As a result, these methods can be categorised from different perspectives. Here are three approaches to categorisation:

The first approach is based on the method’s applicability to different models. When we’re able to apply the explanation method to any model after it’s trained, we call it model-agnostic. As models gain predictive power, they can lose transparency, so applying the method after training avoids sacrificing predictive power for interpretability. This can be seen as an advantage over model-specific methods, which are limited to one specific type of model. However, it doesn’t mean that one is always better than the other.

Model-specific approaches are often also called intrinsic methods because they have access to the internals of a model, such as the weights in a linear regression model.
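To make the distinction concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the linear model, its `WEIGHTS`, the synthetic data and the helper names are hypothetical. The model-specific explanation reads the weights directly, while the model-agnostic one (permutation importance, used here as one example of such a method) only calls `predict`.

```python
import random

# Hypothetical transparent model: a linear regression whose weights we can read.
WEIGHTS = [3.0, 0.5, 0.0]
BIAS = 1.0

def predict(row):
    """Black-box view of the model: features in, prediction out."""
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, row))

def mean_squared_error(rows, targets, predict_fn):
    return sum((predict_fn(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, predict_fn, feature, rng):
    """Model-agnostic: increase in error after shuffling one feature column."""
    baseline_error = mean_squared_error(rows, targets, predict_fn)
    column = [r[feature] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
    return mean_squared_error(shuffled, targets, predict_fn) - baseline_error

rng = random.Random(0)
rows = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [predict(r) for r in rows]  # noise-free targets, for illustration only

# Model-specific explanation: read the internals directly.
model_specific = [abs(w) for w in WEIGHTS]

# Model-agnostic explanation: only calls predict(), never looks at WEIGHTS.
model_agnostic = [
    permutation_importance(rows, targets, predict, j, rng) for j in range(3)
]
```

In this toy setting both views rank the features in the same order, but only the second one would still work if `predict` wrapped a model whose internals we could not inspect.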

Another approach is categorisation based on the scope of the explanation. The explanation might be needed at the instance level or the model level. Local interpretability aims to explain the decision a model makes about a single instance. Global explanations, on the other hand, aim to answer how the parameters of a model affect its decisions overall. Therefore, when the model has many parameters, global explainability can be difficult to achieve.
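The difference in scope can be sketched with a small example. The linear model, its weights and the three data rows below are made up for illustration, and measuring contributions relative to the dataset mean is one possible convention rather than the only one.

```python
# Hypothetical linear model and a tiny dataset (all values are made up).
WEIGHTS = [2.0, -1.0]
BIAS = 0.5
DATA = [[1.0, 4.0], [3.0, 0.0], [5.0, 2.0]]

def predict(row):
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, row))

# Feature means serve as the reference point for "an average instance".
MEANS = [sum(col) / len(DATA) for col in zip(*DATA)]

def local_explanation(row):
    """Per-feature contribution to this one prediction, relative to the average."""
    return [w * (x - m) for w, x, m in zip(WEIGHTS, row, MEANS)]

def global_explanation(rows):
    """Mean absolute contribution of each feature across the whole dataset."""
    local = [local_explanation(r) for r in rows]
    return [sum(abs(c) for c in col) / len(rows) for col in zip(*local)]

# Local: why did the model predict what it did for the first instance?
local = local_explanation(DATA[0])

# Global: which features matter most across all instances?
global_importance = global_explanation(DATA)
```

The local contributions add up to the difference between this instance's prediction and the prediction for the average instance, while the global scores summarise many local explanations into one number per feature.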

The third approach is categorisation based on the stage at which we apply the explainability methods. These stages are pre-modelling, in-modelling and post-modelling (post-hoc). Pre-modelling explainability is about exploring and understanding the dataset. In-modelling explainability is about developing models that are self-explaining or fully observable. Most of the scientific research related to XAI focuses on post-hoc methods, which aim to explain complex models after the training process. Since model-agnostic methods are also applied after training the model, they are post-hoc methods by nature as well.

To understand the decisions of a model, we need an explanation method. One such method is SHAP (SHapley Additive exPlanations), a model-agnostic post-hoc explainability method that uses Shapley values from coalitional game theory to estimate the importance of each feature. It can provide both local and global explanations that are consistent with each other, and it has a very nice Python package.
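To illustrate the idea behind Shapley values, the sketch below computes them exactly for a tiny hypothetical model by enumerating every coalition of features. This is not how the SHAP package works internally (it relies on approximations and model-specific shortcuts); the `model` function, the inputs, and the choice to replace "missing" features with a baseline value are all assumptions made for this example.

```python
from itertools import combinations
from math import factorial

def model(x):
    """Hypothetical model with an interaction term between features 1 and 2."""
    return 2.0 * x[0] + x[1] * x[2] + 1.0

def coalition_value(x, baseline, coalition):
    """Model output when only features in `coalition` take their real values."""
    mixed = [x[i] if i in coalition else baseline[i] for i in range(len(x))]
    return model(mixed)

def shapley_values(x, baseline):
    """Weighted average marginal contribution of each feature over all coalitions."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Fraction of feature orderings in which exactly `size` of the
            # other features come before feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without_i = coalition_value(x, baseline, set(subset))
                with_i = coalition_value(x, baseline, set(subset) | {i})
                phi[i] += weight * (with_i - without_i)
    return phi

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(x, baseline)
# phi is approximately [2.0, 3.0, 3.0]; the values sum to
# model(x) - model(baseline) = 8.0.
```

The interaction term `x[1] * x[2]` is split equally between the two features involved, and the attributions add up to the gap between the prediction and the baseline prediction. Enumerating all coalitions grows exponentially with the number of features, which is why practical tools approximate.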

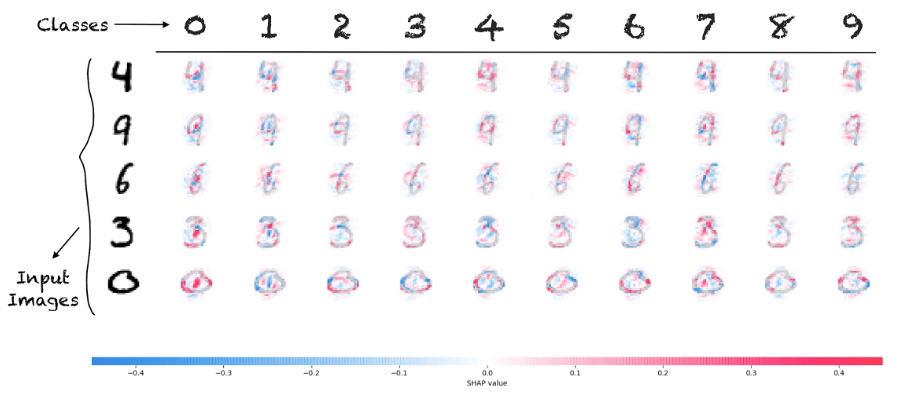

The plot above is an example output of image classification by a 2-layer neural network on the MNIST dataset, explained by SHAP. It shows explanations for the ten output classes (the red-blue digits from 0 to 9) for 5 different input images. The redness or blueness of each pixel represents how much that pixel contributes to the output class: red means a positive contribution and blue a negative one. Looking at digits 4 and 9, the white pixels at the top of digit 4 contribute the most to labelling the input as a 4, because they are what differentiates a 4 from a 9. For digit 0, the white pixels in the middle are very important for classifying the digit as 0. For more examples and applications of SHAP, you can check out the GitHub repository.

As Artificial Intelligence becomes more prevalent in our lives, new Machine Learning models appear before we properly understand the underlying structures of current ones. It’s important to interpret these models in order to develop more reliable and efficient ones. We need to close the gap between the decisions of a model and the reasons behind them, and SHAP is one of the many options in the field of XAI for doing so.
