Generative AI presents a transformative opportunity for the banking industry, enabling banks to scale operations, drive growth, and enhance customer experiences. However, as banks begin implementing generative AI, they must be aware of the risks and challenges involved. One of the primary concerns is the privacy and security of customer data. Banks are trust-led organisations, so they must ensure that customer information is adequately protected and that AI algorithms adhere to strict data privacy regulations. Another fundamental consideration is that generative AI algorithms can inadvertently introduce biases into decision-making processes, leading to unfair or discriminatory outcomes.

There are operational risks as well. Implementing generative AI exposes banks to issues such as system failures, incorrect predictions, or inadequate response handling. And because the technology is still fairly new to the general public and to banks' client base, its adoption in customer journeys may initially meet resistance or a lack of trust from customers. This article explores the strategies and considerations needed to mitigate these risks and successfully leverage generative AI in banking.

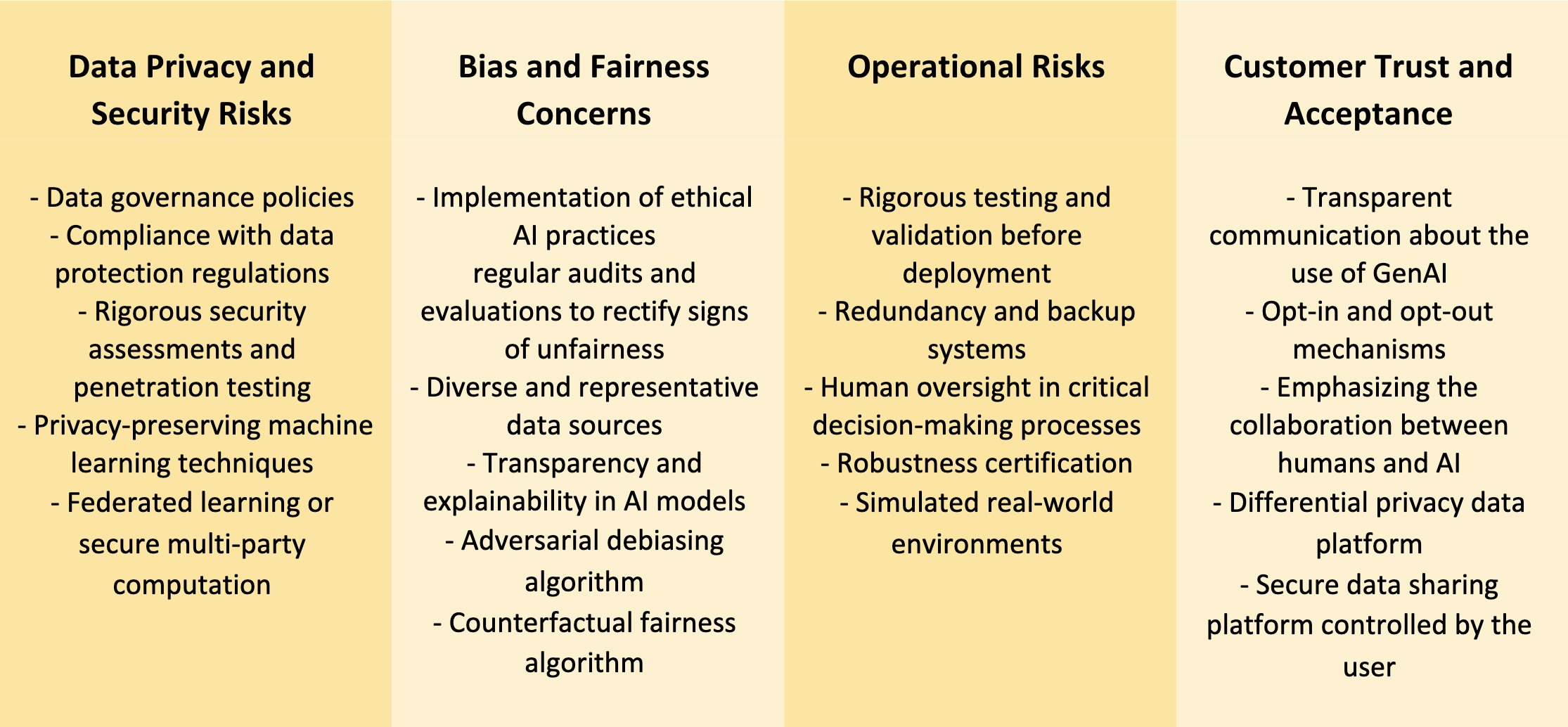

To ensure the privacy and security of customer data, banks must establish robust data governance policies, incorporating encryption, access controls, and regular audits. Compliance with evolving data protection regulations such as GDPR is crucial and calls for regular policy reviews and revisions. Additionally, rigorous security assessments and penetration testing should be conducted to identify and address vulnerabilities, protecting sensitive customer information and preventing data breaches.

HSBC, a leading international bank, implemented robust data governance policies, including encryption, access controls, and regular audits, to protect customer data and ensure compliance with regulations like GDPR, mitigating the data privacy and security risks associated with generative AI implementation. Banks could also explore privacy-preserving machine learning techniques, such as federated learning or secure multi-party computation, which allow models to be trained or computations to be performed without pooling raw customer data in one place.
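To make secure multi-party computation more concrete, here is a minimal sketch of one of its simplest building blocks: additive secret sharing used to compute a sum across several parties. It assumes a handful of hypothetical banks that want to know their combined count of, say, confirmed fraud cases without disclosing their individual counts, and that the parties do not collude. The function names and the modulus are illustrative choices, and all parties are simulated in a single process rather than over a network.

```python
import secrets

# Illustrative modulus: the Mersenne prime 2**61 - 1. Inputs and their total must stay below it.
PRIME = 2**61 - 1


def make_shares(value: int, n_parties: int) -> list[int]:
    """Split `value` into n_parties random shares that add up to `value` modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Sum the parties' private values without any single share revealing an input.

    All parties are simulated locally here; in practice each share would be sent
    to a different party over a secure channel.
    """
    n = len(private_values)
    # Row i holds the shares party i creates; party j receives column j.
    all_shares = [make_shares(v, n) for v in private_values]
    # Each party adds up the shares it received; on its own this partial sum looks random.
    partial_sums = [sum(row[j] for row in all_shares) % PRIME for j in range(n)]
    # Only combining every partial sum reveals the total.
    return sum(partial_sums) % PRIME


# Three hypothetical banks with private counts.
print(secure_sum([120, 45, 300]))  # 465
```

The random shares cancel out only when every partial sum is combined, so the total is exact while each individual contribution stays hidden from the other parties.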

Mitigating biases in generative AI algorithms requires the implementation of ethical AI practices. Fairness and bias mitigation techniques should be employed during development and training, with regular audits and evaluations to detect and correct signs of unfairness. Using diverse and representative data sources is essential to minimise bias in the training data, while transparent and explainable models make it possible to explain clearly which factors drove a decision, fostering trust among customers.
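As an illustration of what a regular fairness audit might check, the sketch below computes the approval rate for each customer group and the ratio between the lowest and highest rate, often called the disparate impact ratio. The 0.8 threshold follows the widely cited "four-fifths rule" heuristic. The data format, group labels, and function names are assumptions for illustration, and a real audit would examine several metrics rather than this one alone.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_audit(decisions, threshold=0.8):
    """Compare the lowest and highest group approval rates and flag large gaps."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    # If no group is ever approved, there is no disparity between groups to measure.
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


# Hypothetical decisions: group A approved 8 of 10 times, group B 5 of 10 times.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(disparate_impact_audit(decisions))
```

In this made-up data, group B's approval rate is 0.5 / 0.8 = 0.625 times group A's. That ratio falls below the 0.8 threshold, so the model would be flagged for closer review.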

Bank of America has been employing ethical AI practices by implementing fairness and bias mitigation techniques during the development and training of its generative AI models. The bank also prioritises diverse and representative data sources and continuously monitors the performance of its models to identify and correct any biases that may arise. Moreover, Bank of America emphasises transparency in its AI models, providing clear explanations of the factors and variables that influence AI-driven decisions to enhance customer trust.

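A next-generation approach to bias and fairness could go further and address biases proactively. Two candidate techniques are adversarial debiasing, which trains the model alongside an adversary that tries to predict a protected attribute from the model's outputs, and counterfactual fairness, which requires that a decision would not change if an individual's protected attribute had been different.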
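Full counterfactual fairness relies on a causal model of how attributes influence one another, which is beyond a short example. A much simpler related check, sketched below, swaps only the protected attribute of each applicant and reports whose decision changes. It assumes the model can be called as a plain function on a dictionary of features. The models and field names are hypothetical. Because the check ignores any causal effect the attribute has on other features, passing it does not establish counterfactual fairness.

```python
def attribute_flip_test(model, applicants, attribute, values):
    """Return applicants whose decision changes when only `attribute` is varied."""
    affected = []
    for applicant in applicants:
        # Score the same applicant once for each possible value of the protected attribute.
        outcomes = {model({**applicant, attribute: value}) for value in values}
        if len(outcomes) > 1:
            affected.append(applicant)
    return affected


# Hypothetical models: one uses the protected attribute directly, one does not.
def biased_model(a):
    return a["income"] > 50_000 and a["gender"] != "F"


def income_only_model(a):
    return a["income"] > 50_000


applicants = [{"income": 60_000, "gender": "M"}, {"income": 40_000, "gender": "F"}]
print(len(attribute_flip_test(biased_model, applicants, "gender", ["F", "M"])))       # 1
print(len(attribute_flip_test(income_only_model, applicants, "gender", ["F", "M"])))  # 0
```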

To address operational risks, generative AI models must undergo rigorous testing and validation before deployment. Implementing redundancy and backup systems ensures business continuity in the event of system failures. Human oversight should be maintained in critical decision-making processes, allowing human experts to review and validate AI-driven outputs. This combination of testing, redundancy, and human collaboration mitigates operational risks associated with generative AI implementation.
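The human-oversight and redundancy ideas above can be sketched as a thin routing layer around the model. Outputs for critical tasks, or outputs the model is unsure about, go to a human reviewer, and a backup path takes over if the primary model fails. The task labels, the 0.9 confidence threshold, and the `ModelOutput` structure are hypothetical. The sketch also assumes the model reports a confidence score, which not every generative model provides.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical tasks that always require a human decision, whatever the model's confidence.
CRITICAL_TASKS = {"credit_approval", "fraud_decision"}


@dataclass
class ModelOutput:
    task: str
    answer: str
    confidence: float  # assumed to be in [0, 1]


def route(output: ModelOutput, threshold: float = 0.9) -> str:
    """Decide whether an AI output can be used directly or needs human review."""
    if output.task in CRITICAL_TASKS or output.confidence < threshold:
        return "human_review"
    return "auto"


def generate_with_fallback(primary: Callable[[str], ModelOutput],
                           backup: Callable[[str], ModelOutput],
                           request: str) -> ModelOutput:
    """Call the primary model; if it raises, fall back to the backup system."""
    try:
        return primary(request)
    except Exception:
        # In production the failure would also be logged and alerted on.
        return backup(request)


# Usage with hypothetical stand-ins for a failing model and a rules-based backup.
def failing_model(request: str) -> ModelOutput:
    raise TimeoutError("primary model unavailable")


def rules_based_backup(request: str) -> ModelOutput:
    return ModelOutput("faq", "Please see our opening hours page.", 0.5)


result = generate_with_fallback(failing_model, rules_based_backup, "When are you open?")
print(route(result))  # human_review
```

The backup answer carries a low confidence score, so it is routed to a person rather than sent straight to the customer. Degraded operation therefore adds human oversight instead of removing it.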

Citibank has been managing the risks associated with generative AI by implementing rigorous testing and validation procedures for its AI models. It conducts extensive stress testing to evaluate how generative AI models perform under various scenarios, identifying weaknesses or limitations before deployment. Additionally, Citibank has established redundancy and backup systems, including failover mechanisms, data backups, and comprehensive disaster recovery plans, to ensure continuity of operations if an AI system fails and to minimise disruption and customer impact. Moreover, Citibank maintains a high level of human oversight and intervention in critical decision-making processes, with human experts reviewing and validating AI-driven outputs, particularly in areas such as fraud detection and credit approvals. This combination of rigorous testing, redundancy measures, and human oversight has allowed Citibank to mitigate operational risks and integrate generative AI smoothly into its banking operations.

More advanced testing methodologies, such as robustness certification or testing in simulated real-world environments, could help banks uncover vulnerabilities faster and make generative AI systems more reliable, further reducing operational risk.
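Robustness certification gives formal guarantees and needs specialised tooling. A simpler empirical cousin is easy to sketch: perturb inputs slightly, here by simulating typos, and measure how often the model's output stays the same. The `model` is assumed to be any function from text to a label or answer. The typo generator and the scoring are illustrative choices. This kind of testing can reveal fragility but cannot certify its absence.

```python
import random


def add_typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent characters at a random position to simulate a typo."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    chars = list(text)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def stability_score(model, prompt: str, n_variants: int = 100, seed: int = 0) -> float:
    """Fraction of typo variants for which the model returns the same output as for `prompt`."""
    rng = random.Random(seed)  # fixed seed so test runs are reproducible
    baseline = model(prompt)
    unchanged = sum(model(add_typo(prompt, rng)) == baseline for _ in range(n_variants))
    return unchanged / n_variants


# Hypothetical keyword-based intent classifier standing in for a real model.
def keyword_intent(text: str) -> str:
    return "check_balance" if "balance" in text.lower() else "other"


print(stability_score(keyword_intent, "What is my account balance?"))
```

A score well below 1.0 shows that the keyword matcher breaks whenever a typo lands inside "balance". A low score like this is the kind of weakness such testing is meant to surface before customers encounter it.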

Building trust and acceptance among customers requires transparent communication about the use of generative AI, its benefits, and the safeguards in place for data privacy and security. Offering opt-in and opt-out mechanisms lets customers control how they engage with AI-driven services. Emphasising collaboration between humans and AI, by highlighting the role human experts play alongside generative AI technologies, further fosters trust and acceptance.
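One simple way to implement opt-in and opt-out is to check a stored consent preference before routing a customer to an AI-driven service. The sketch below assumes an opt-in model, in which customers who have not made a choice are served by a human agent. The `Consent` values, channel names, and in-memory dictionary are hypothetical stand-ins for a bank's real preference store.

```python
from enum import Enum


class Consent(Enum):
    OPTED_IN = "opted_in"
    OPTED_OUT = "opted_out"


def choose_channel(customer_id: str, preferences: dict[str, Consent]) -> str:
    """Route only customers who explicitly opted in to the AI assistant."""
    if preferences.get(customer_id) is Consent.OPTED_IN:
        return "ai_assistant"
    # Opted-out customers, and those who never made a choice, get a human agent.
    return "human_agent"


def set_consent(customer_id: str, consent: Consent, preferences: dict[str, Consent]) -> None:
    """Record or change a customer's choice; it applies from the next interaction."""
    preferences[customer_id] = consent


preferences: dict[str, Consent] = {}
set_consent("cust-001", Consent.OPTED_IN, preferences)
print(choose_channel("cust-001", preferences))  # ai_assistant
print(choose_channel("cust-002", preferences))  # human_agent
```

Defaulting to the human channel means a missing or corrupted preference never silently enrols a customer in an AI-driven service.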

JPMorgan Chase has used transparent communication to educate its customers about the use of generative AI, emphasising its benefits and the measures taken to ensure privacy and security. The bank also provides opt-in and opt-out mechanisms, giving customers control over their engagement with AI-driven services, respecting their preferences and fostering trust. Additionally, the bank highlights the partnership between human experts and generative AI technologies. This approach reassures customers that AI is designed to assist and enhance human capabilities rather than replace human interaction entirely.

Technologies like differential privacy or secure user-controlled data sharing platforms can enhance customer trust even further by providing advanced privacy protection and personalised control over data usage.
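To give a flavour of differential privacy, the sketch below applies the classic Laplace mechanism to a counting query. Adding or removing one customer changes a count by at most 1, so adding Laplace noise with scale 1/ε makes the released count ε-differentially private. The records and predicate are hypothetical. Python's `random` module is used only for illustration; production systems should rely on a vetted differential privacy library, because naive floating-point noise generation can leak information.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means stronger privacy and noisier answers.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical records: (customer_id, uses_mobile_app)
records = [(i, i % 3 == 0) for i in range(1000)]
rng = random.Random(42)
print(private_count(records, lambda r: r[1], epsilon=0.5, rng=rng))
```

Each released value is close to, but not exactly, the true count. The noise hides whether any single customer is in the data, while aggregate trends remain usable.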

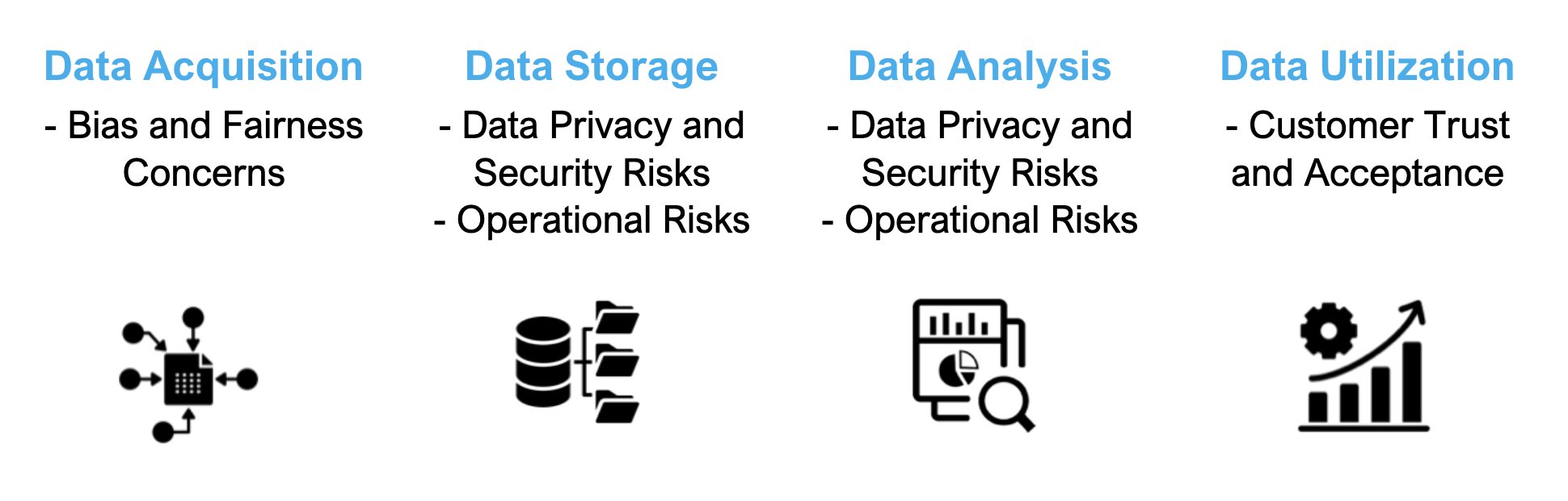

Using generative AI starts with using data, so the data management processes behind it must also be taken into account. Consider the end-to-end lifecycle of Data Acquisition (capturing and gathering data from various sources), Data Storage (choosing appropriate storage mechanisms and structures for efficient storage), Data Analysis (applying data analytics, data mining, and visualisation techniques to derive meaningful information from the data), and Data Utilisation (exploring and using data for decision-making and insight generation). Mapping it out this way shows that each of the risks identified above relates to one or more of these processes.

The implementation of generative AI in the banking industry offers transformative opportunities but also presents various risks and challenges. Mitigating them is essential to getting the most out of generative AI in daily operations. For data privacy and security, the main takeaways are to establish robust data governance, ensure compliance with data protection regulations, and conduct security assessments and penetration testing. By following HSBC's example, banks can protect customer data and mitigate the data privacy and security risks associated with generative AI implementation.

To uphold ethical AI practices and address biases in generative AI algorithms, banks need to employ fairness and bias mitigation techniques during development and training, use diverse and representative data sources, and promote transparency and explainability in AI models, as Bank of America's approach demonstrates.

Minimising operational risks depends on rigorous testing and validation of generative AI models before deployment, together with redundancy and backup systems. Citibank's example also highlights the importance of maintaining human oversight in critical decision-making processes, allowing experts to review and validate AI-driven outputs.

To increase customer trust and acceptance, banks should be transparent about their use of generative AI, assure users that safeguards for data privacy and security are in place, and offer opt-in and opt-out mechanisms. JPMorgan Chase's emphasis on transparent communication and customer control shows how this can foster trust and acceptance.

By acting on these insights, banks can navigate the risks associated with generative AI implementation and leverage its transformative potential. It is crucial for banks to apply these risk management strategies to each stage of their data management processes, from acquisition to utilisation, to ensure comprehensive and effective risk mitigation throughout the entire lifecycle.

Struggling to identify where and how to incorporate generative AI into your business strategy? Mobiquity's DECODE AI immersive 1-day ignition workshop helps you to delve deeper into the possibilities of gen AI and gain valuable insights along with actionable results. Walk away with a tailored portfolio of gen AI opportunities for your business, along with a detailed execution plan to drive real business value.